Introduction

When we think of microsurgery or micromanipulation, the first image that comes to mind is often a surgeon performing delicate operations within a very small area. These procedures demand a high level of technical skill and a steady hand, because the surgeon must manipulate tiny organs, blood vessels, or nerves within a limited field of view. The microscopic 3D vision system developed during my doctoral research addresses these demands: it is a new application that aims to improve precision, safety, and the operator's understanding of the scene in minimally invasive surgery and micromanipulation.

What is a 3D Microscopic Vision System?

It is a technology that combines 3D machine vision with binocular microscopy. The primary goal is to give surgeons a clear, three-dimensional view of the surgical field, so that they can observe the target from various angles and plan the next steps more precisely and safely. In addition, the point cloud data generated by the system can be fed into a navigation system. Using proprietary artificial intelligence algorithms and advanced visualization techniques, this navigation system provides cognitive assistance based on 3D machine vision, helping surgeons quickly perceive the structure and state of the object they are operating on.

How Does the 3D Microscopic Vision System Assist in Surgery or Micromanipulation?

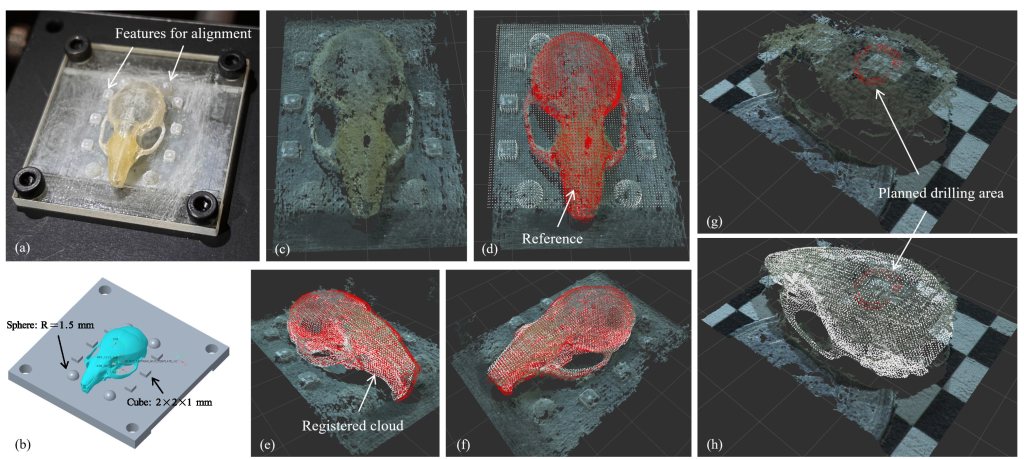

First, the 3D microscopic vision system can generate high-precision point cloud data of the object’s surface, as illustrated in the accompanying image and video.

The video demonstrates real-time 3D visual feedback during a mouse craniotomy procedure. In this surgery, a circular window is drilled into the mouse’s skull with a micro drill; the skull in this area is about 0.27 to 0.33 mm thick. Subsequent steps include implanting a mini organ into the brain tissue, sealing the cranial window with a transparent glass cover, and observing the growth of the mini organ. The greatest challenge lies in assessing whether the circular cranial window is complete. Because the skull tissue is so thin, it tends to be semi-transparent, which makes the cut hard to judge by eye. In addition, the force or vibration feedback from the micro drill is too subtle relative to the drill’s weight to serve as a reliable reference. These two factors present significant challenges for both robotic remote control and automated operation.

The 3D microscopic vision system not only makes depth information visible, which is absent from traditional 2D microscopic images, but also clearly reflects the displacements that result from tissue deformation. These two types of information are often overlooked by the operator during surgery, yet they are critical for the navigation system when it handles robot calibration, registration of preoperative medical images, and estimation of the target object’s condition. My doctoral research focused on making full use of depth information in microsurgery and micromanipulation, and multiple papers based on these results have already been published or are being prepared for publication.
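To make the idea of reading tissue displacement from depth data concrete, here is a minimal sketch in Python with NumPy. It is not the method used in the prototype. It assumes two organized point clouds of the same scene (arrays of shape H x W x 3, in millimetres) in which the same grid index refers to the same image pixel, and it treats the stated 0.10 mm accuracy as a noise floor. The function name `displacement_map` and the parameter `noise_floor_mm` are hypothetical.

```python
import numpy as np


def displacement_map(cloud_before, cloud_after, noise_floor_mm=0.10):
    """Per-point displacement between two organized point clouds.

    Both inputs are H x W x 3 arrays in millimetres. The pixel-wise
    correspondence between the two frames is an assumption of this sketch.
    Returns the displacement magnitude per point and a boolean mask of
    points that moved by more than the noise floor.
    """
    before = np.asarray(cloud_before, dtype=float)
    after = np.asarray(cloud_after, dtype=float)
    if before.shape != after.shape or before.shape[-1] != 3:
        raise ValueError("expected two arrays of identical shape (H, W, 3)")

    # Euclidean distance between corresponding points.
    magnitude = np.linalg.norm(after - before, axis=-1)

    # Displacements at or below the noise floor cannot be told apart
    # from measurement error, so they are not flagged as motion.
    moved = magnitude > noise_floor_mm
    return magnitude, moved


if __name__ == "__main__":
    before = np.zeros((2, 2, 3))
    after = before.copy()
    after[0, 0, 2] = 0.25  # one point sinks 0.25 mm along the depth axis
    magnitude, moved = displacement_map(before, after)
    print(magnitude)
    print(moved)
```

A real pipeline would need to establish correspondences between frames, for example through registration or tracking, before a comparison like this is meaningful.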

The current prototype system is capable of real-time intraoperative imaging at 30 Hz with an accuracy of 0.10 mm. It meets the design requirements of both microrobot remote control and navigation systems. Experimental results demonstrate that the system outperforms any other prototype 3D microscopic system worldwide in terms of real-time performance and precision, which points to promising application potential.
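As a quick back-of-the-envelope check on these figures, the short Python sketch below works out the time available per frame at 30 Hz and compares the 0.10 mm accuracy with the 0.27 to 0.33 mm skull thickness mentioned earlier. The function names are hypothetical. The sketch also assumes that the accuracy figure applies along the depth direction, which the text does not state.

```python
FRAME_RATE_HZ = 30.0                 # imaging rate stated for the prototype
ACCURACY_MM = 0.10                   # accuracy stated for the prototype
SKULL_THICKNESS_MM = (0.27, 0.33)    # mouse skull thickness range from the text


def frame_budget_ms(rate_hz):
    """Time available to acquire and process one frame, in milliseconds."""
    return 1000.0 / rate_hz


def accuracy_fraction(accuracy_mm, thickness_mm):
    """Accuracy expressed as a fraction of the structure's thickness."""
    return accuracy_mm / thickness_mm


if __name__ == "__main__":
    print(f"frame budget: {frame_budget_ms(FRAME_RATE_HZ):.1f} ms")
    for thickness in SKULL_THICKNESS_MM:
        fraction = accuracy_fraction(ACCURACY_MM, thickness)
        print(f"{thickness:.2f} mm skull: accuracy is {fraction:.0%} of thickness")
```

Under these assumptions, every reconstruction has to finish in roughly 33 ms, and the measurement error corresponds to roughly a third of the skull thickness.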